Biologically-Informed Hybrid Membership Inference Attacks on Generative Genomic Models

The public sharing of data, methodologies and discoveries within the scientific community has, over centuries, been one of the main factors enabling large-scale international collaborations to achieve remarkable research results [1]. A prime example is the vast amount of genomic data generated as a byproduct of the Big Biological Data era, which has led to important discoveries in personalized medicine and biomedical research [2].

However, such unprecedented availability of sensitive data quickly raised concerns within the scientific community about Genomic Privacy and its implications for the public sharing of genomes [3]. Over the past decade, synthetic data has emerged as a promising answer to this ethical and legal dilemma, since it can ease the legal issues surrounding sensitive data sharing by avoiding the direct release of real individuals’ records [4].

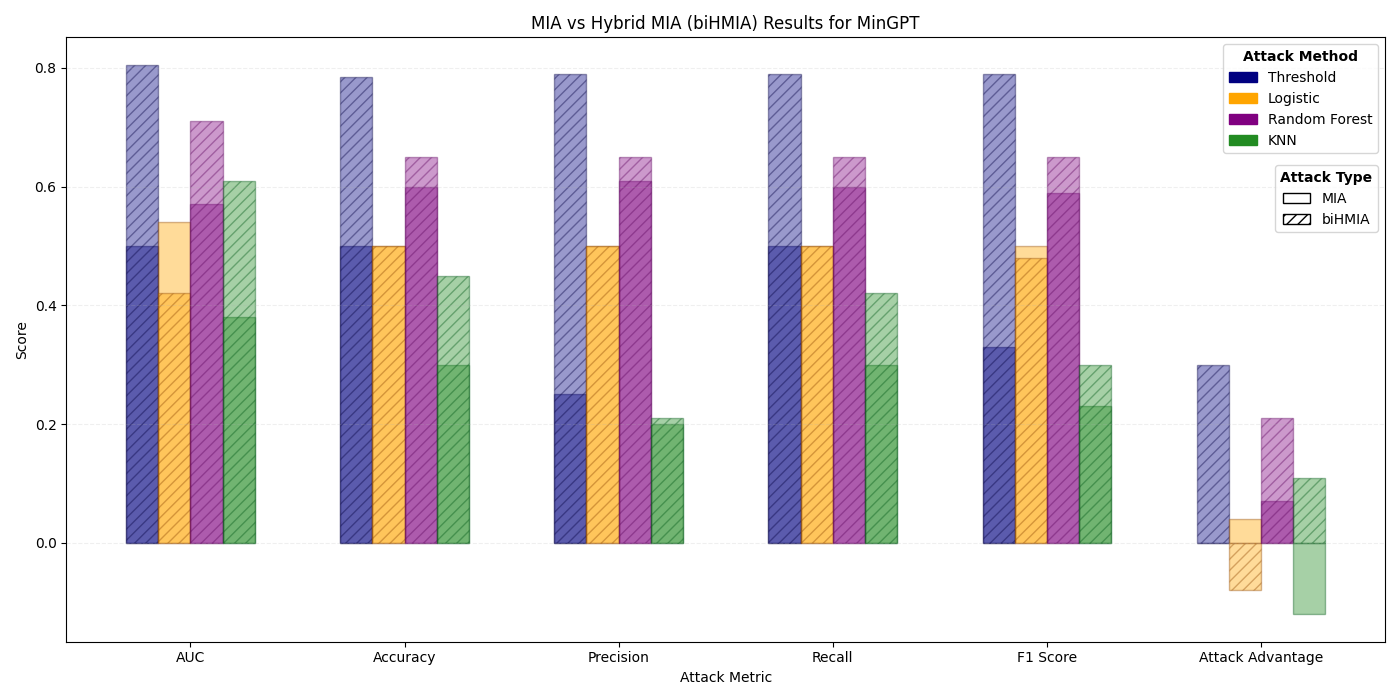

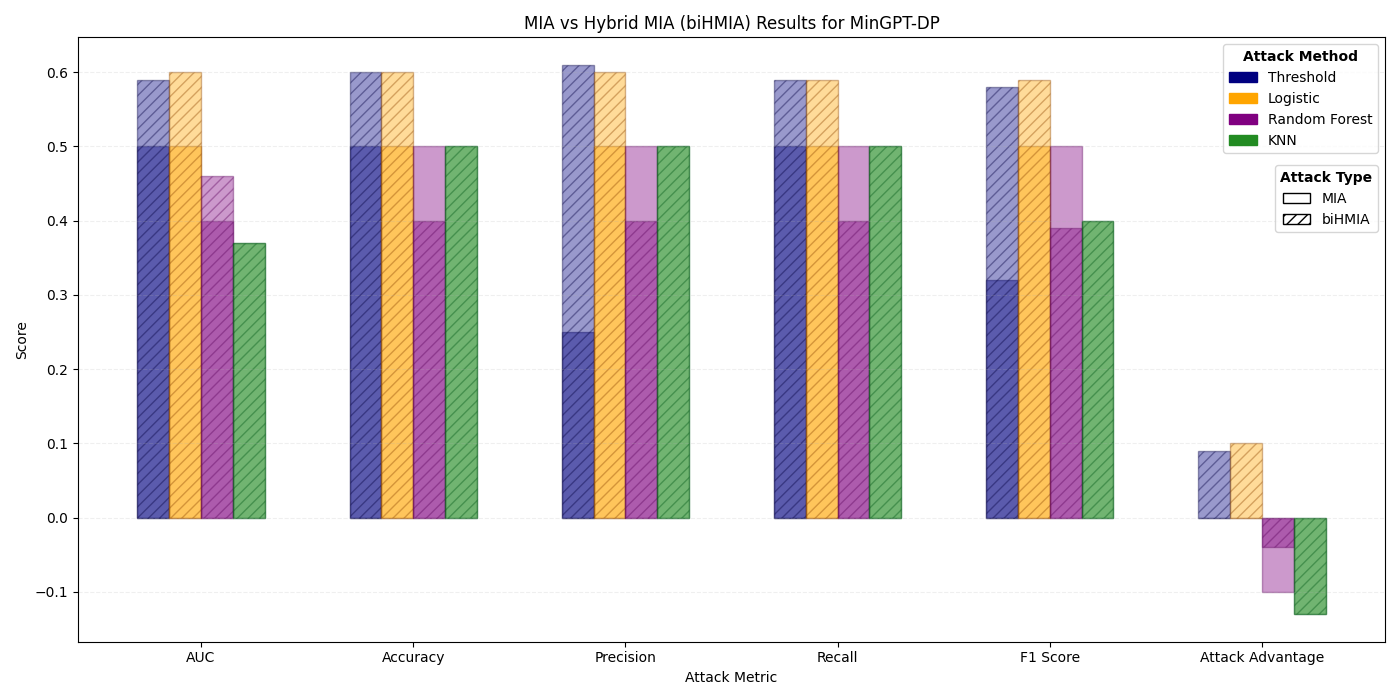

In this research, we propose a method for generating sample-level synthetic genetic variant data with Differentially Private Language Models (LMs), bringing the formal privacy guarantees of Differential Privacy (DP) to sample-level genomics. We show that both fine-tuned and custom-trained LMs are viable generators of mock genomic variants, and that DP training reduces adversarial attack success. Furthermore, we show that smaller generative models naturally offer, on average, stronger privacy protection than larger models, without a significant decrease in utility.
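The text does not state how DP is enforced during LM training. A widely used mechanism for neural networks is DP-SGD, swapped in here purely as an illustration: each example's gradient is clipped to an L2 norm bound, the clipped gradients are summed, and Gaussian noise scaled to that bound is added before the averaged update. The stdlib-only sketch assumes gradients are flat lists of floats; `clip_gradient`, `dp_sgd_step` and their parameters are hypothetical names, and the privacy accounting that converts the noise multiplier into an (ε, δ) budget is not shown.

```python
import math
import random
from typing import List, Sequence


def clip_gradient(grad: Sequence[float], max_norm: float) -> List[float]:
    """Rescale one per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(
    params: Sequence[float],
    per_example_grads: Sequence[Sequence[float]],
    lr: float,
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> List[float]:
    """One DP-SGD update (hypothetical helper): clip, sum, add Gaussian noise, average."""
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    batch_size = len(clipped)
    # Sum the clipped gradients coordinate by coordinate.
    summed = [sum(g[i] for g in clipped) for i in range(len(params))]
    # Noise std is proportional to the clipping bound, i.e. the per-example sensitivity.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


# Toy usage: the first gradient has norm 5 and is clipped down to norm 1.
rng = random.Random(0)
new_params = dp_sgd_step([0.0, 0.0], [[3.0, 4.0], [0.0, 0.5]],
                         lr=1.0, max_norm=1.0, noise_multiplier=1.1, rng=rng)
print(new_params)
```

Setting `noise_multiplier=0` reduces the step to plain clipped SGD, which is handy for checking the arithmetic. For real PyTorch language models, a maintained library such as Opacus is the usual choice over hand-rolling this update.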

Additionally, we introduce a tailored privacy assessment framework based on a Biologically-Informed “Hybrid” Membership Inference Attack (biHMIA), which combines a traditional black-box Membership Inference Attack (MIA) with contextual genomic metrics to increase attack power. Against non-DP models, our hybrid attack achieves, on average, higher adversarial success than traditional MIA, while against DP-trained models it scores similarly to traditional MIA, confirming that DP can be leveraged for safer genomic data generation.
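The section does not specify which black-box attack or which genomic metrics biHMIA combines. As a rough illustration only, the sketch scores a target genome by mixing a common black-box signal for synthetic data, the distance to the closest synthetic record (DCR), with a hypothetical genomic-context feature: the share of the target's rare alternate alleles (rare by reference allele frequency) that reappear somewhere in the synthetic set. Genotypes are assumed to be 0/1/2 alternate-allele counts; `dcr_score`, `rare_variant_score`, `hybrid_score`, the 0.05 frequency cut-off and the equal weighting are all assumptions, not the paper's settings. Attack success is summarized as the AUC of member versus non-member scores.

```python
from typing import List, Sequence

Genotype = Sequence[int]  # alternate-allele count (0, 1 or 2) per variant site


def dcr_score(target: Genotype, synthetic: List[Genotype]) -> float:
    """Black-box baseline: negative Hamming distance to the closest synthetic record."""
    return float(-min(sum(a != b for a, b in zip(target, s)) for s in synthetic))


def rare_variant_score(target: Genotype, synthetic: List[Genotype],
                       ref_freq: Sequence[float], max_freq: float = 0.05) -> float:
    """Hypothetical genomic feature: fraction of the target's rare alleles seen in synthetic data."""
    rare_sites = [i for i, f in enumerate(ref_freq) if f <= max_freq and target[i] > 0]
    if not rare_sites:
        return 0.0
    hits = sum(any(s[i] > 0 for s in synthetic) for i in rare_sites)
    return hits / len(rare_sites)


def hybrid_score(target: Genotype, synthetic: List[Genotype],
                 ref_freq: Sequence[float], weight: float = 0.5) -> float:
    """Linear mix of the two signals; higher means 'more likely a training member'."""
    black_box = 1.0 + dcr_score(target, synthetic) / len(target)  # rescaled to [0, 1]
    genomic = rare_variant_score(target, synthetic, ref_freq)
    return weight * black_box + (1.0 - weight) * genomic


def auc(member_scores: Sequence[float], nonmember_scores: Sequence[float]) -> float:
    """Probability a random member outscores a random non-member (ties count 1/2)."""
    wins = 0.0
    for m in member_scores:
        for n in nonmember_scores:
            wins += 1.0 if m > n else 0.5 if m == n else 0.0
    return wins / (len(member_scores) * len(nonmember_scores))


# Toy data for illustration only (not from the paper).
synthetic = [[0, 1, 2, 0], [1, 0, 0, 0]]
ref_freq = [0.3, 0.02, 0.4, 0.01]
members = [[0, 1, 2, 0]]
non_members = [[2, 0, 1, 1]]
m_scores = [hybrid_score(t, synthetic, ref_freq) for t in members]
n_scores = [hybrid_score(t, synthetic, ref_freq) for t in non_members]
print(auc(m_scores, n_scores))  # 1.0
```

A fixed linear weighting keeps the example short; learning the combination (for instance, a logistic regression over both features) would be an equally plausible design.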

Paper

Accepted at the 2026 AAAI Workshop on Shaping Responsible Synthetic Data in the Era of Foundation Models.


References

[1] Adam D. Marks and Karen K. Steinberg. The Ethics of Access to Online Genetic Databases: Private or Public? American Journal of PharmacoGenomics, 2(3):207–212, 2002.

[2] Jeff Gauthier, Antony T. Vincent, Steve J. Charette, and Nicolas Derome. A brief history of bioinformatics. Briefings in Bioinformatics, 20(6):1981–1996, 2019.

[3] Muhammad Naveed, Erman Ayday, Ellen W. Clayton, Jacques Fellay, Carl A. Gunter, Jean-Pierre Hubaux, Bradley A. Malin, and Xiaofeng Wang. Privacy in the Genomic Era. ACM Computing Surveys, 48(1):1–44, 2015.

[4] Florent Guépin, Matthieu Meeus, Ana-Maria Cretu, and Yves-Alexandre de Montjoye. Synthetic is all you need: removing the auxiliary data assumption for membership inference attacks against synthetic data. arXiv:2307.01701 [cs], 2023.